This article is more than 1 year old

LinkedIn is using Aussie users’ data to train AI models, unless they change their settings to opt out.

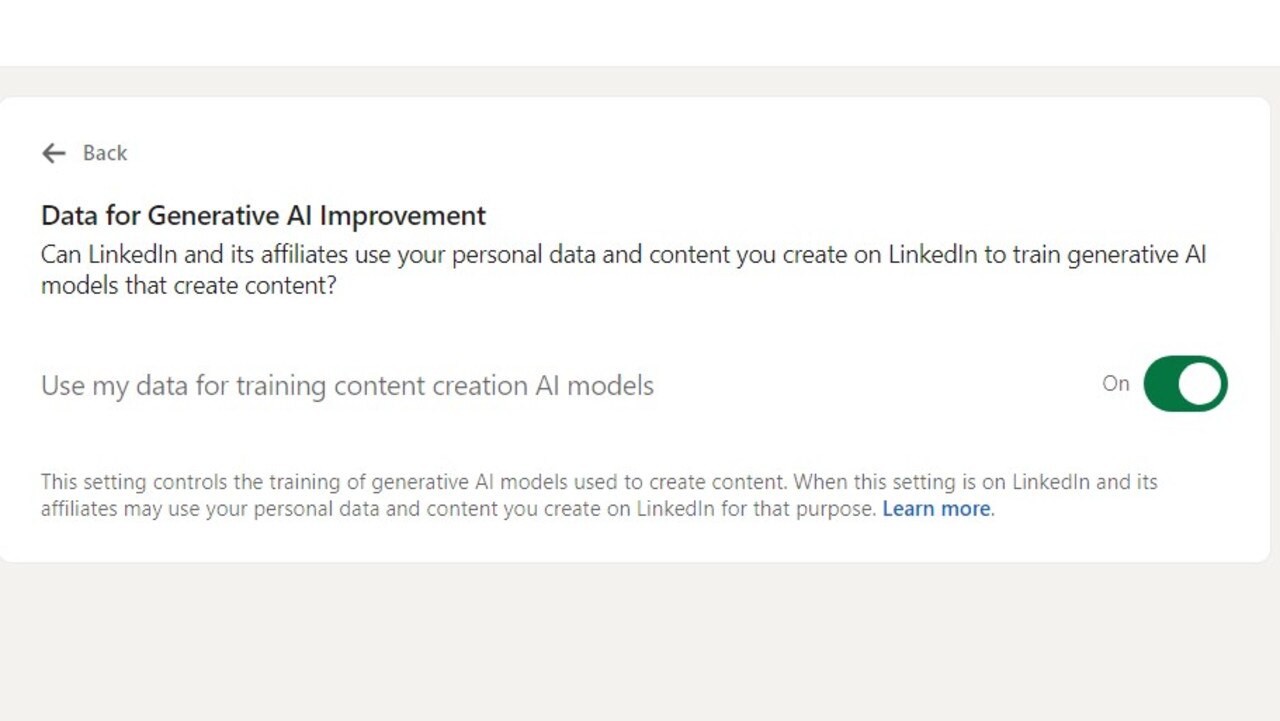

A setting called ‘Data for Generative AI Improvement’ has been automatically switched on for users outside the EU, EEA, UK or Switzerland, giving permission for LinkedIn and unnamed “affiliates” to “use your personal data and content you create” on the social network “for training content creation AI models”.

This can include using a person’s profile data or content in posts.

Users who know about the setting can switch it off, but doing so only stops the company and its affiliates from using their personal data or content to train models going forward. It does not affect training that has already been done.

It was only last week that Meta admitted to scraping Australian adult users’ public data, such as photos and posts on Facebook and Instagram dating back to 2007, to train its generative AI models.

Meta’s privacy policy director Melinda Claybaugh appeared before an inquiry where she confirmed the process when pressed by senators.

Australian users have not been given the option to opt out, unlike users in Europe, where regulation is tougher.

Unless an Australian user had consciously set posts to private, the company scraped the data.

“Users can choose to opt out manually, but how will they know to do so if they didn’t see the video LinkedIn posted explaining the new ‘feature’?” he said.

“This showcases the dark side of big tech. While users can opt out, the setting is enabled by default, which raises concerns about informed consent.

“The absence of a proactive opt-in is a typical example of how big tech leverages user apathy or a lack of awareness to further its AI initiatives.

“This move exemplifies the growing ethical concerns surrounding how tech giants use personal data, often without users’ explicit knowledge, to fuel AI advancements.”

How LinkedIn uses your data to train AI

LinkedIn, which is owned by Microsoft, says it uses generative AI models for “a variety of purposes,” including its AI-powered writing assistant to draft messages.

These AI models may be trained by LinkedIn or another provider, like Microsoft’s Azure OpenAI service.

Greg Snapper, a spokesman for the company, told Forbes: “We are not sending data back to OpenAI for them to train their models.” OpenAI is best known as the creator of the chatbot ChatGPT.

When users click to “learn more” about the ‘Data for Generative AI Improvement’ setting, LinkedIn explains: “This setting applies to training and finetuning generative AI models that are used to generate content (e.g. suggested posts or messages) and does not apply to LinkedIn’s or its affiliates’ development of AI models used for other purposes, such as models used to personalise your LinkedIn experience or models used for security, trust, or anti-abuse purposes.”

Elsewhere in its generative AI FAQs, LinkedIn says it will “seek to minimise personal data in the data sets used to train the models”, including by using technology to redact or remove personal data from the training dataset.

US accuses social media giants of ‘vast surveillance’

The US Federal Trade Commission said this week that a years-long study showed social media titans have engaged in “vast surveillance” to make money from people’s personal information.

The report, based on inquiries sent nearly four years ago to nine companies, found that they collected troves of data, sometimes through data brokers, and could retain the information collected about users and non-users of their platforms indefinitely.

“The report lays out how social media and video streaming companies harvest an enormous amount of Americans’ personal data and monetise it to the tune of billions of dollars a year,” FTC chair Lina Khan said.

“Several firms’ failure to adequately protect kids and teens online is especially troubling.”

Ms Khan contended that the surveillance practices endangered people’s privacy and exposed them to the potential of identity theft or stalking.

The findings were based on answers to orders sent in late 2020 to companies including Meta, YouTube, Snap, Twitch-owner Amazon, TikTok parent company ByteDance, and X, formerly known as Twitter.

UN experts’ AI warning

Also this week, United Nations experts cautioned that the development of artificial intelligence should not be guided by market forces alone.

However, they stopped short of recommending a powerful worldwide governing body to oversee the rollout and evolution of the technology.

The panel of around 40 experts from the fields of technology, law and data protection was established by UN Secretary-General Antonio Guterres in October.

Their report raises alarm over the lack of global governance of AI as well as the exclusion of developing countries from debates surrounding the technology.

“There is, today, a global governance deficit with respect to AI,” which by its nature is cross-border, the experts warned in their report.

“AI must serve humanity equitably and safely,” Mr Guterres said this week.

“Left unchecked, the dangers posed by artificial intelligence could have serious implications for democracy, peace and stability.”

Against the backdrop of his call, the experts urged UN members to put in place mechanisms to ease global co-operation on the issue and to prevent the technology’s unintended proliferation.

“The development, deployment and use of such a technology cannot be left to the whims of markets alone,” the report said.

– with AFP