ANALYSIS

It’s not about whether it’s real. It’s about wanting it to be true. That’s why US presidential hopeful Donald Trump shared a picture of himself in a church. Praying. Devoutly. With six-fingered hands.

An unfortunate mutation he’s been hiding from the public all along?

Or evidence the image was artificially generated by a machine that doesn’t understand human anatomy?

Trump was in the run-up to an all-important Republican Party presidential primary last weekend, a vote by the party faithful to choose their next candidate, when he reposted and liked the idyllic image on his TruthSocial feed.

TruthSocial is the social media platform Trump and his backers established after he was booted from the likes of Facebook and Twitter for hate speech, disinformation and incitement during past campaigning efforts.

The picture’s source was full of patriotic fervour: “Patriot4Life”, whose handle, 1776WeThePeople1776, refers to the US War of Independence rather than the failed US Civil War of 1861.

The subject was humble and devout: Trump basked in the golden glow of leadlight windows while praying alone in an empty church.

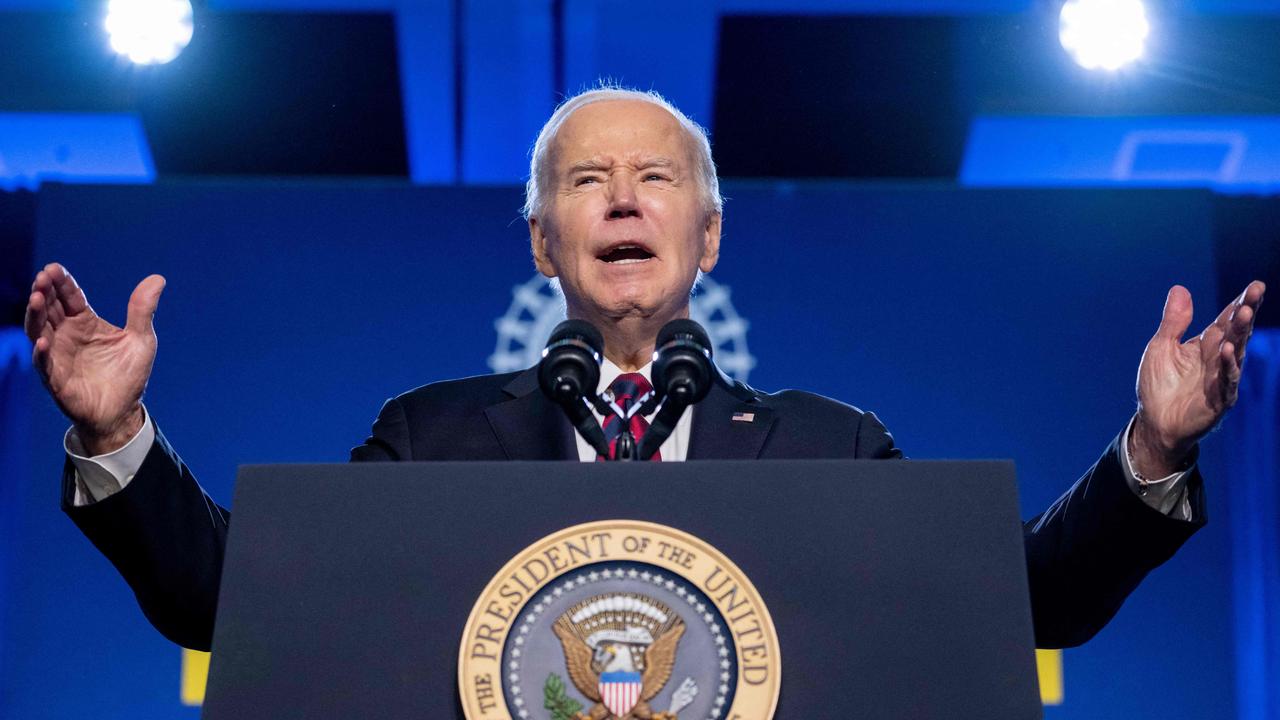

Meanwhile, in New Hampshire, “President Joe Biden” was personally phoning constituents in crucial electorates to discourage them from voting. But it was an AI-generated robocall mimicking his voice. And that voice is being advertised for sale online by commercial text-to-speech service providers.

“With the assistance of AI, the process of generating misleading news headlines can be automated and weaponised with minimal human intervention,” says University of Cambridge social psychologist Professor Sander van der Linden.

“My prediction for 2024 is that AI-generated misinformation will be coming to an election near you, and you likely won’t even realise it.”

Have a little faith

Trump is not a regular churchgoer.

But his core constituency is America’s white Christian far right.

Iowa-based Pastor Paul Figie proclaimed Trump to be “ordained by God” before one rally, describing him as a “martyr” of the US legal and justice systems.

And Patriot4Life was trying to provide the evidence demanded for such a holy anointing.

Trump has embraced the power of faith.

He recently shared a three-minute video on TruthSocial declaring, “On June 14, 1946, God looked down on his planned paradise and said, ‘I need a caretaker,’ so God gave us Trump”.

Earlier, the Republican Party had released an AI-generated video showing an apocalyptic America led by a demonic-faced Biden.

The faith-based angle worked. Trump overwhelmed his key Iowa opponent, Florida Governor Ron DeSantis, in the vote, and DeSantis soon dropped out of the race.

Matters of faith are intangible. They are the product of an individual’s relationship with their personal choice of almighty.

Photos and speech are tangible. And while clumsy AI still leaves plenty of clues in artificial imagery, simulated voices are already being used to scam parents out of money, in voicemail messages that sound like their children begging for help.

Reality as perception

Last year, a false report of the Pentagon being bombed sent shockwaves through the US. Only a lack of follow-up evidence managed to calm believers – many of whom were trigger-happy stockbrokers.

The DeSantis campaign posted fake images of Trump hugging Dr Anthony Fauci, who is derided by many Republicans for his role in combating the Covid-19 pandemic, to its presidential campaign feeds.

And earlier this month, even a faked picture of Osama bin Laden sitting in on a Pentagon meeting called in response to the September 11, 2001, attacks revived the online fervour of “false flag” conspiracy theorists.

“By mixing real and AI-generated images, politicians can blur the lines between fact and fiction – and use AI to boost their political attacks,” says van der Linden.

During the heated 2020 US presidential election, social media firms such as Facebook, Twitter and YouTube enacted strict new standards to contain false and hate-based content.

According to recent research, Facebook and YouTube have since severely curtailed those standards. Twitter (now X) has all but abandoned them.

“This has fuelled a toxic online environment that is vulnerable to exploitation from anti-democracy forces, white supremacists and other bad actors,” says Professor Ngaire Woods, dean of the University of Oxford’s Blavatnik School of Government.

And women are among the most heavily affected, she adds.

“A major reason is the disproportionate amount of abuse female politicians and candidates receive online, including threats of rape and violence. The rise of artificial intelligence, which can be used to create sexually explicit deepfakes, is only compounding the problem.”

When truth decays

Ray Block, who leads the RAND Corporation’s “Truth Decay” research unit, says even blatantly fake news and imagery serve to weaken the influence of real facts and considered analysis on public life.

“Truth Decay covers disinformation, propaganda, and deepfakes. It covers all of the emotional triggers that information can have,” he says. “It’s the talking heads on TV, the distrust and conspiracy theories, the basic disagreements over not just what’s right, but what’s real.”

Free speech is difficult to regulate.

For the US, the challenge is finding a balance between freedom of expression, the need for truth in a representative democracy, and demands for loyalty.

Microsoft late last year accused the Chinese Communist Party of operating a complex array of social media accounts using AI-generated fake news reports to influence US voters. US intelligence agencies have made similar accusations against Russia and Iran.

But rules governing disinformation spread by US citizens themselves are virtually non-existent.

“Sometimes politicians or government leaders tell you things that aren’t 100 per cent true,” says Block. “So you can argue that truth is fundamental to democracy. How do you reconcile those two things? What is the truth that we’re trying to preserve?”

Is politics simply a matter of faith in a chosen leader?

“Disinformation often serves the dual purpose of making the originator look good, and their opponents look bad,” argues Louisiana State University social psychologist Colleen Sinclair. “Disinformation takes this further by painting issues as a battle between good and evil, using accusations of evilness to legitimatize violence …

“(But) you often look into the things you buy rather than taking the advertising at face value before you hand over your money. This should also go for what information you buy into.”

Jamie Seidel is a freelance writer | @JamieSeidel