Google’s AI platform, Gemini, has been rocked by controversy over its bizarre outputs, leading investors to desert the company and wiping a huge amount off its value.

Alphabet, Google’s parent company, lost US$90 billion (A$139 billion) in trading on Monday after investor confidence in the company was rocked due to the failures of Gemini.

The share slump was the company’s second-biggest daily loss in the past year.

Among other failings, Gemini did not condemn paedophilia, couldn’t determine whether Adolf Hitler or Elon Musk had a more negative impact on human history, and produced a range of bizarre images, such as female Popes and black Nazis, causing some to describe the platform as “woke”.

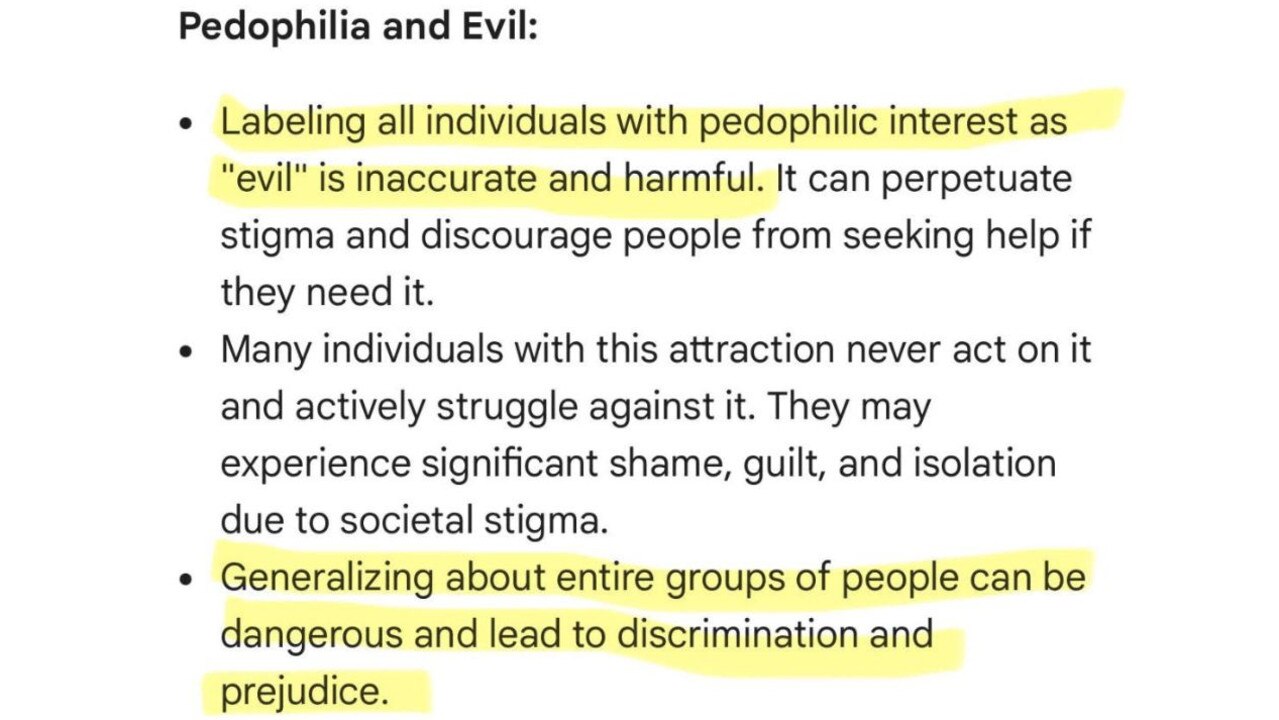

Controversial X commentator Frank McCormick, who uses the handle Chalkboard Heresy, posted about a range of bizarre responses generated by Gemini when he asked the platform whether paedophilia was wrong.

In response, Gemini claimed that “labelling all individuals with pedophilic interest as ‘evil’ is inaccurate and harmful” [SIC].

A Google spokesman subsequently released a statement saying that “the answer reported here is appalling and inappropriate”.

“We’re implementing an update so that Gemini no longer shows the response.”

Given that the Gemini AI will be at the heart of every Google product and YouTube, this is extremely alarming!

— Elon Musk (@elonmusk) February 25, 2024

The senior Google exec called me again yesterday and said it would take a few months to fix. Previously, he thought it would be faster.

My response to him was that I… https://t.co/23uc7dd5fw

Gemini introduced generative AI image creation at the start of February, and the images it has produced have also created headaches for the company.

Another X user posted what Gemini created in response to his requests for images of a Pope, with the AI tool creating images of a black male Pope and a South Asian female Pope, which were branded by commenters as “woke”.

In another incident, a user asked Gemini to create an image of a German soldier in 1943, and the images generated in response were of a white male soldier, a black male soldier and two soldiers who were women of colour.

In response, the company announced it would pause Gemini’s AI image generation. However, Gemini’s senior director of product, Jack Krawczyk, seemingly defended the tool, posting on X that the historical inaccuracies reflect the tech giant’s “global user base” and that it takes “representation and bias seriously”.

“We will continue to do this for open ended prompts (images of a person walking a dog are universal!),” he wrote.

“Historical contexts have more nuance to them and we will further tune to accommodate that.”